This is the code snippet you will need to place into the console: var x = document.querySelectorAll("a") var myarray = for (var i=0 iNameLinks' for (var i=0 i'+ myarray + ''+myarray+'' } var w = window.open("") w.document.write(table) } make_table() This will open up the console, into which you can type or copy and paste snippets of code.

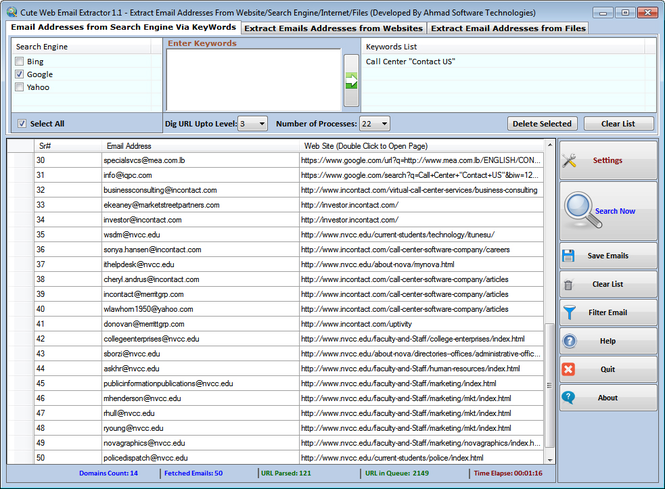

To open the developer console, you can either hit F12 or right-click the page and select “Inspect” or ”Inspect Element” depending on your browser of choice. Now we just need to open up the developer console and run the code. I’m using the Select Committee inquiries list from the 2017 Parliament page as an example - it is a page with a massive amount of links that, as a grouping, may be useful to a lot of people. Select Committee inquiries from the 2017 Parliament Open up your browser (yes, this even works in Internet Explorer if you’re a glutton for punishment) and navigate to the page from which you’d like to extract links. The bit of code I’ll be providing further down the page.Literally any browser made in the past 10 years.When this code runs, it opens a new tab in the browser and outputs a table containing the text of each hyperlink and the link itself, so there is some context to what each link is pointing to. However, this code would work equally well to extract any other text element types in HTML documents, with a few small changes. In this example, I am extracting all links from a web page, as this is a task I regularly perform on web pages. Regular old JavaScript is powerful enough to extract information from a single web page, and the JavaScript in question can be run in the browser’s developer console.

This is using a sledgehammer to crack a nut. Previously when a case like this arose, I would still fire up my Python IDE or RStudio, write and execute a script to extract this information. But sometimes a project only needs a small amount of data, from just one page on a website.

JAVASCRIPT URL EXTRACTOR HOW TO

Using the console to extract links from a web pageĮxtracting and cleaning data from websites and documents is my bread and butter and I have really enjoyed learning how to systematically extract data from multiple web pages and even multiple websites using Python and R.
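To give a rough idea of the "few small changes" needed to pull out other element types, here is a minimal sketch that splits the link-extraction snippet into small helper functions. The helper names (`cleanText`, `escapeHtml`, `extractRows`, `buildTable`) are hypothetical and not part of the original snippet, and the escaping step is an addition of mine, assuming you would rather not have stray markup in the scraped text break the output table.

```javascript
// Hypothetical helpers for illustration; names are not from the original snippet.

// Collapse runs of whitespace so multi-line element text fits on one row.
function cleanText(text) {
  return String(text).replace(/\s+/g, " ").trim();
}

// Escape characters that would otherwise be parsed as HTML in the output table.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Turn any array-like of elements into [text, value] rows.
// `attribute` is the property read for the second column, e.g. "href" or "src".
function extractRows(elements, attribute) {
  return Array.from(elements, function (el) {
    return [cleanText(el.textContent), el[attribute] || ""];
  });
}

// Build the table markup from the header labels and the rows.
function buildTable(rows, headers) {
  var head = headers
    .map(function (h) { return "<th>" + escapeHtml(h) + "</th>"; })
    .join("");
  var body = rows
    .map(function (row) {
      var cells = row
        .map(function (cell) { return "<td>" + escapeHtml(cell) + "</td>"; })
        .join("");
      return "<tr>" + cells + "</tr>";
    })
    .join("");
  return "<table><thead><tr>" + head + "</tr></thead><tbody>" + body + "</tbody></table>";
}

// Usage in the browser console, here listing images instead of links:
//   var rows = extractRows(document.querySelectorAll("img"), "src");
//   var w = window.open("");
//   w.document.write(buildTable(rows, ["Text", "Source"]));
```

Swapping the selector and the attribute name is all it takes to target a different element type; for example, `extractRows(document.querySelectorAll("a"), "href")` with the headers `["Name", "Links"]` should produce the same kind of table as the link example.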
